The move toward competency-based and performance improvement continuing education in the health professions (CEHP) means that we need to pay closer attention to the roles that organizational culture, stakeholder perspectives, and clinical settings play in learning and in behavioral or practice change. Qualitative research is a critical tool educators can use to gain insight into these and other factors, especially as CEHP begins to align with performance and quality improvement initiatives. In this article, we explore frequently asked questions that CEHP educators have about using qualitative research effectively.

FAQ 1: What exactly is qualitative research?

Qualitative research in healthcare refers broadly to methods used to collect and analyze textual or image-based data in order to make sense of behavioral and practice issues in the clinical setting. We can also use qualitative research to make sense of program evaluation responses.

There are some important differences between qualitative and quantitative research. Instead of using statistical techniques to analyze numerical data, qualitative analysis uses structured, interpretive steps to analyze text or images. In addition, qualitative research doesn’t usually test a hypothesis or lead to generalizations, but instead focuses on the complexity of meanings that participants bring to their experience of clinical practice and to the context in which practice occurs. You can also use qualitative methods to generate a hypothesis that could subsequently be tested by a quantitative method, such as a survey.

FAQ 2: I have mountains of responses to open-ended questions from program evaluations that I think are full of valuable insight, but I don’t know what to do with all this text.

You need a strategy for bringing order to chaos! Typically, CEHP practitioners list responses to open-ended questions in program evaluations. Yet written or oral responses to evaluation questions offer so much more. They provide rich detail that you can use to develop a more complete description of how and why healthcare providers do what they do, or think what they think.

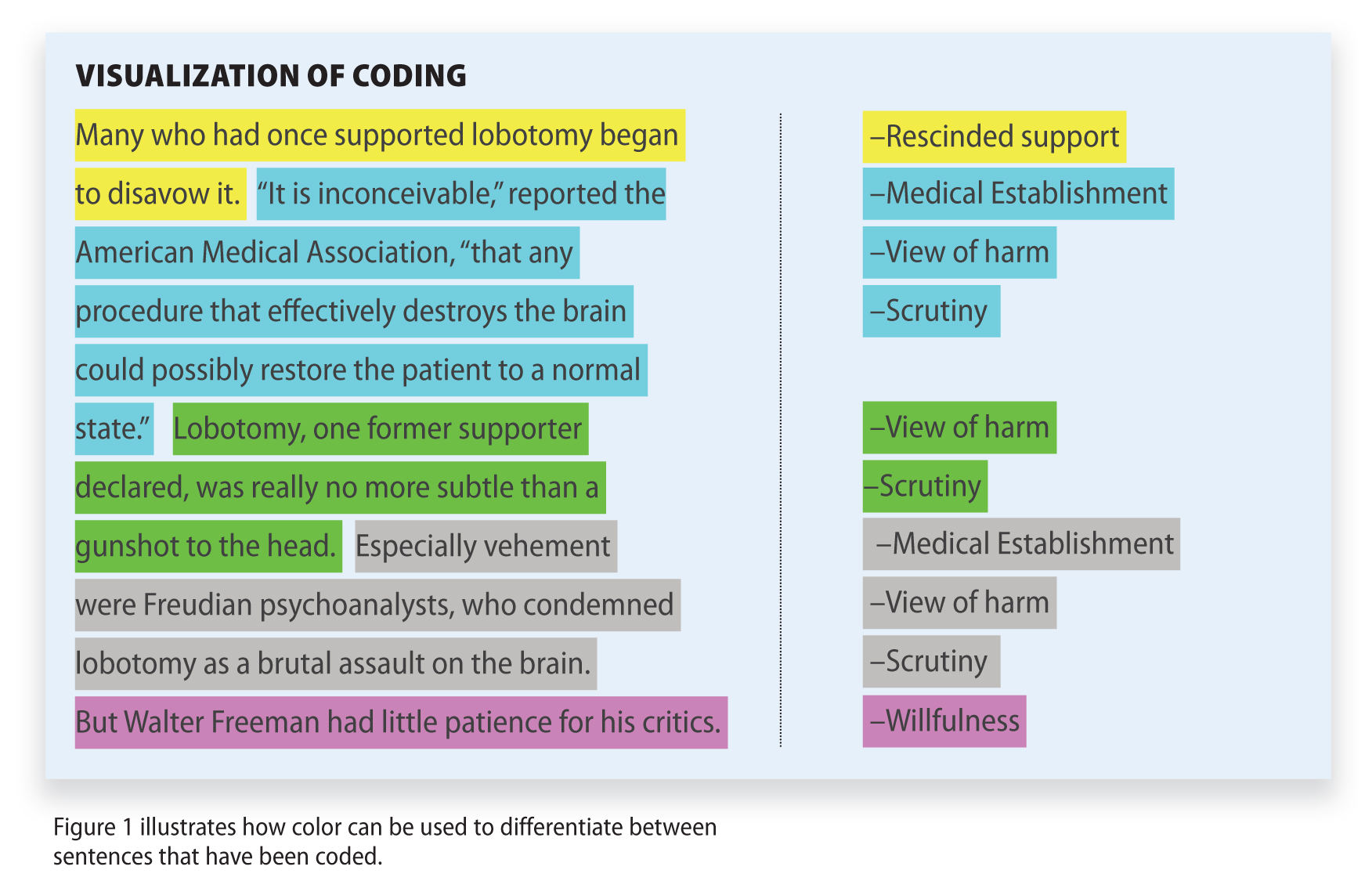

Unlike quantitative analysis, the qualitative approach is iterative: you can start analysis (thinking about and coding data) right away. Coding is simply a way of breaking the text you have, whether responses to evaluation questions, interviews with key opinion leaders or patients, or even hospital policy documents, into discrete units and grouping those units according to their characteristics. In practice, coding involves tagging words, phrases, or sentences that reflect particular themes to build a picture of what’s going on from the ground up. In fact, grounded theory is one of the main approaches to qualitative analysis. Taking a grounded approach simply means that your insights into the issues that matter to learners are derived from what learners tell you. To do this, you need to keep an open mind about what learners are saying, rather than assuming you already know what their issues are or judging what they say against what they should say.
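Coding itself is interpretive work done by a person, but the bookkeeping that follows is mechanical. As a minimal sketch, assuming responses have already been split into segments and tagged by hand, the Python below groups segments by code so you can read all the text filed under each emerging theme side by side. The `CodedSegment` structure, the `group_by_code` helper, and the sample responses are hypothetical illustrations, not features of any particular qualitative analysis package.

```python
from __future__ import annotations

from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class CodedSegment:
    """One unit of text (word, phrase, or sentence) plus the codes an analyst gave it."""
    respondent_id: str        # hypothetical respondent identifier
    text: str                 # the excerpt from the evaluation response
    codes: tuple[str, ...]    # codes assigned by a human analyst, not by this script


def group_by_code(segments: list[CodedSegment]) -> dict[str, list[CodedSegment]]:
    """Map each code to every segment tagged with it, preserving input order.

    A segment with several codes appears under each of them, which is how
    overlapping themes usually behave in qualitative coding.
    """
    grouped: dict[str, list[CodedSegment]] = defaultdict(list)
    for segment in segments:
        for code in segment.codes:
            grouped[code].append(segment)
    return dict(grouped)


# Hypothetical, hand-coded excerpts from open-ended evaluation responses.
segments = [
    CodedSegment("R01", "No time during clinic to look up the guideline",
                 ("time pressure", "access to guidelines")),
    CodedSegment("R02", "The EHR alert fires too late to change anything",
                 ("EHR workflow",)),
    CodedSegment("R03", "Too many patients per shift to counsel properly",
                 ("time pressure",)),
]

# Print each code with its segment count, then the excerpts filed under it.
for code, tagged in sorted(group_by_code(segments).items()):
    print(f"{code} ({len(tagged)} segments)")
    for segment in tagged:
        print(f"  [{segment.respondent_id}] {segment.text}")
```

Reading every excerpt filed under one code side by side is where the interpretive work resumes: as patterns emerge, you might split a code, merge it with another, or rename it.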

It’s helpful to ask yourself three questions when you are looking at qualitative data for the first time:

What’s happening?

What’s important?

What patterns are emerging?

FAQ 3: What is the advantage of digging deeper, rather than just listing responses to open-ended questions?

Situated learning theories and systems thinking suggest that learning, and behavioral and practice change, occur within a complex organizational system made up of multiple actors and communities of practice with various roles, identities, and responsibilities. Qualitative research is uniquely positioned to help you explore in depth the context of education interventions, the perspectives of multiple stakeholders who are likely to be affected by an education intervention, and the social and cultural filters that influence clinical practice. In short, you can get a clearer picture of what matters in the everyday lives of clinicians and develop insight into shared or discordant understandings among professional groups.

Qualitative Research in Practice: There is considerable evidence that differences in the experiences and perspectives of clinicians can impact healthcare quality, patient safety, and clinical practice. It might be important to understand the strength and implications of these perspectives when you are planning education interventions designed to optimize interdisciplinary learning or team-based care. Or it could be important to understand why one group of learners in your educational activity had a more positive experience than another. In both examples, qualitative analysis can help you develop insight you can use to inform the next stage of your education planning process.

By analyzing qualitative data you are in a better position to:

• Identify what really matters to education participants;

• Detect obstacles to changing clinical performance; and

• Explain why learning and/or performance improvement does or does not occur.

FAQ 4: What does the term “mixed methods” mean?

The term “mixed methods” refers to any combination of quantitative and qualitative research methods. For instance, running a focus group could help you develop the optimal “a” through “e” answer choices for a multiple-choice survey item. Or a research team may combine quantitative chart-based data reviews with patient interviews to get a more textured picture of both the patient outcomes recorded in charts and the outcomes patients report themselves. Qualitative data can often tell the “story behind the numbers.” By combining the methodologies, you benefit from both the validity of quantitative analysis (answering the “what”) and the depth of understanding that qualitative analysis provides (answering the “how” and “why”).

FAQ 5: Where in the CEHP lifecycle might qualitative analysis be appropriate?

Regardless of which planning model you adopt (e.g., Analysis-Design-Development-Implementation-Evaluation [ADDIE], Plan-Do-Study-Act, Six Sigma, or Lean), analyzing qualitative data can pay dividends throughout the CEHP planning process.

• Initial planning phase: To identify education needs and gaps and to build a detailed picture of organizational processes, relationship dynamics, and other factors that might contribute to (or undermine) readiness for learning or for change.

• Design phase: To identify barriers and enablers to learning and to the application of learning in practice. Understanding the cultural and operational contexts of interventions can enable educators to customize activities appropriately and enhance the translation of practitioner knowledge into practice.

• Program implementation/formative evaluation: To acquire early feedback on the effectiveness of an intervention, and to generate deeper insight into what participants are really thinking and feeling about the education program and how they can use what they’ve learned in clinical practice.

• Program evaluation: To generate context-sensitive outcome measures.

FAQ 6: How do I ensure that my interpretation of what’s important in evaluation responses is reliable?

There are strategies and resources you can use to ensure that your analysis is rigorous (Figure 2). For instance, software programs such as NVivo, ATLAS.ti, and Dedoose can help you manage and code data in systematic steps that others can subsequently see and review. These programs help you organize and categorize data; annotate textual, visual, or audio data; and search for themes, relationships, patterns, and other information systematically and efficiently. Another advantage of software is that you can create an audit trail, so other members of your team can work on the analysis too and test your interpretation. This kind of analytic triangulation helps ensure rigor and reduce interpretive bias. You can even have more than one analyst code the same body of data and calculate inter-rater reliability.
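One widely used statistic for agreement between two coders is Cohen’s kappa, κ = (p_o - p_e) / (1 - p_e), where p_o is the proportion of segments on which the coders agree and p_e is the agreement you would expect by chance given how often each coder used each code. The Python below is a minimal sketch, assuming each coder assigns exactly one code from a shared codebook to every segment; the function name and the sample codes are hypothetical. Segments that can carry several codes, or studies with more than two coders, call for other approaches (for example, computing agreement code by code, or using Krippendorff’s alpha).

```python
from collections import Counter


def cohens_kappa(coder_a: list, coder_b: list) -> float:
    """Cohen's kappa for two coders who each assigned one code per segment.

    kappa = (p_o - p_e) / (1 - p_e), where p_o is observed agreement and
    p_e is chance agreement computed from each coder's code frequencies.
    """
    if len(coder_a) != len(coder_b) or not coder_a:
        raise ValueError("Both coders must code the same, non-empty list of segments")
    n = len(coder_a)

    # Observed agreement: share of segments where both coders chose the same code.
    p_o = sum(a == b for a, b in zip(coder_a, coder_b)) / n

    # Chance agreement: for each code, multiply the proportion of segments each
    # coder gave that code, then sum across all codes either coder used.
    freq_a, freq_b = Counter(coder_a), Counter(coder_b)
    p_e = sum((freq_a[c] / n) * (freq_b[c] / n) for c in set(freq_a) | set(freq_b))

    if p_e == 1:
        # Both coders used one identical code throughout; kappa is 0/0 here,
        # so by convention we report perfect agreement.
        return 1.0
    return (p_o - p_e) / (1 - p_e)


# Hypothetical codes two analysts gave the same six evaluation-response segments.
coder_a = ["barrier", "barrier", "enabler", "enabler", "barrier", "neutral"]
coder_b = ["barrier", "enabler", "enabler", "enabler", "barrier", "neutral"]
print(round(cohens_kappa(coder_a, coder_b), 3))  # → 0.739
```

Here the coders agree on 5 of 6 segments (p_o ≈ 0.83), but because both used “barrier” and “enabler” often, chance agreement is about 0.36, which brings kappa down to about 0.74. Conventions for what counts as acceptable agreement vary, so treat any threshold as a judgment call for your team.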

FAQ 7: Are there specific strategies for reporting the results of a qualitative analysis?

There are all sorts of neat things you can do to tell the story embedded in your evaluation responses or to convey trends and relationships in qualitative data. For instance, visual maps, charts, and models can show how ideas expressed in individual responses fit into a larger pattern, and word clouds can show how frequently a term or theme comes up. Sometimes all you need is a juicy quote to illustrate a particular issue. This tactic is especially effective when the data represent distinct perspectives.
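Behind a word cloud or chart is usually a simple tally. As a minimal sketch, assuming each response has already been coded by hand and labeled with the respondent’s professional group, the Python below counts how many respondents in each group raised each theme, which can make distinct perspectives easier to spot before you choose quotes to illustrate them. The `theme_counts_by_group` function, the groups, and the themes are hypothetical. Counts like these describe your data set; they are not a basis for generalizing beyond it.

```python
from collections import Counter, defaultdict


def theme_counts_by_group(coded_responses):
    """Count how many responses in each group mention each theme.

    coded_responses: iterable of (group, themes) pairs, one per response.
    Each theme is counted at most once per response, so the counts reflect
    how many respondents raised it, not how often they repeated it.
    """
    table = defaultdict(Counter)
    for group, themes in coded_responses:
        table[group].update(set(themes))
    return table


# Hypothetical hand-coded evaluation responses from two professional groups.
responses = [
    ("nurses", {"staffing", "handoff communication"}),
    ("nurses", {"staffing"}),
    ("physicians", {"EHR workflow", "handoff communication"}),
    ("physicians", {"EHR workflow"}),
    ("nurses", {"handoff communication"}),
]

table = theme_counts_by_group(responses)
groups = sorted(table)
themes = sorted({theme for counts in table.values() for theme in counts})

# Print a side-by-side table: one row per theme, one column per group.
print("theme".ljust(24) + "".join(g.rjust(12) for g in groups))
for theme in themes:
    print(theme.ljust(24) + "".join(str(table[g][theme]).rjust(12) for g in groups))
```

In this made-up example, staffing comes up only among nurses and EHR workflow only among physicians, while handoff communication is shared: the kind of contrast a well-chosen quote from each group can bring to life.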

Intrigued but not quite ready to jump into qualitative research? The British Medical Journal and the Journal of the American Medical Association periodically publish ‘how-to’ articles on qualitative research that are good resources for beginners, and the American Evaluation Association regularly runs training courses on using qualitative methodologies in program evaluation. Finally, the Alliance for Continuing Education in the Health Professions will present a webinar on qualitative analysis in continuing education in January. Look for details on ACEHP.org.

Alexandra Howson, MA (Hons), PhD, CCMEP, owner, Thistle Editorial LLC, is an experienced qualitative researcher and educator (Universities of Edinburgh, Aberdeen, and UC Berkeley Extension), a medical sociologist with an international peer-review publication record, and an author of several books, including widely used university texts. Through Thistle Editorial, she develops content to support CEHP, quality improvement, and patient safety initiatives. Reach her at [email protected].

Wendy Turell, DrPH, CCMEP, president of Contextive Research LLC, works